Data Connection

A high-impact project centered on modernizing ETL (Extract, Transform, Load) pipelines using Databricks. By leveraging AI-enabled tools, the solution dramatically cut down on manual data operations, ensuring clean, transformed data was rapidly and reliably loaded into the core software platform.

The Vision

The vision for the Dataflow Integration (DFI) project was to revolutionize the way customer data is ingested, processed, and utilized, transforming a complex, manual process into a seamless, AI-powered, customer-controlled pipeline.

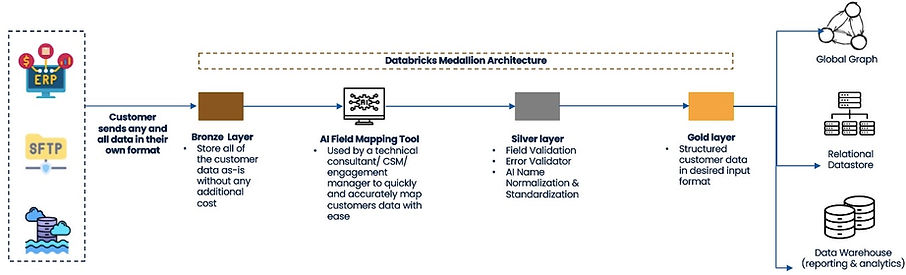

The PM Architecture

The DFI project was a direct response to customer demand for frictionless, multi-source data ingestion. The proposed architecture leverages AI-enabled tools to automate data structuring and standardization, eliminating the customer's preprocessing burden. The pipeline manages quality control autonomously, so clean, ready-to-use data is loaded into the software with no manual intervention.

The PM Approach

The PM approach for the Dataflow Integration (DFI) project was a phased, incremental rollout that scaled system connectivity and throughput.

The Minimum Viable Product (MVP) established the core automated pipeline. Data was ingested via Secure File Transfer Protocol (SFTP), passed through AI-powered tools for automated data mapping and normalization, then through the Data Processor/Validator, and finally into the Final Data Store. The MVP validated this core functionality using an on-demand process.
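The MVP flow (ingest, map, normalize, validate, store) can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the project's implementation: the canonical field names are hypothetical, and `difflib` fuzzy matching stands in for the AI field-mapping engine, whose actual design the write-up does not describe.

```python
import difflib
import re
from typing import Optional

# Hypothetical target schema; the platform's real field names are not given.
CANONICAL_FIELDS = ["customer_name", "email", "order_total"]


def map_field(source_name: str) -> Optional[str]:
    """Stand-in for AI field mapping: pick the closest canonical field name."""
    key = re.sub(r"[^a-z0-9]+", "_", source_name.lower()).strip("_")
    matches = difflib.get_close_matches(key, CANONICAL_FIELDS, n=1, cutoff=0.6)
    return matches[0] if matches else None


def normalize_record(raw: dict) -> dict:
    """Rename mapped fields and collapse stray whitespace in their values."""
    out = {}
    for name, value in raw.items():
        target = map_field(name)
        if target is not None:  # unmapped source fields are dropped
            out[target] = " ".join(str(value).split())
    return out


def validate(record: dict) -> list:
    """Return the canonical fields that are missing or empty."""
    return [f for f in CANONICAL_FIELDS if not record.get(f)]


def run_pipeline(rows):
    """Process ingested rows; return (final_store, rejected) lists."""
    store, rejected = [], []
    for raw in rows:
        record = normalize_record(raw)
        (rejected if validate(record) else store).append(record)
    return store, rejected


rows = [
    {"Customer Name": "  Acme   Corp ", "E-mail": "ops@acme.test", "Order Total": "120.50"},
    {"Customer Name": "Beta LLC", "Fax": "555"},  # missing required fields
]
store, rejected = run_pipeline(rows)
```

Here the first row lands in `store` with canonical field names, while the second is rejected by the validator because it lacks an email and an order total.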

Following the MVP, Phase 2 immediately scaled ingestion capabilities by integrating the platform with complex, structured enterprise sources like ERP systems and Data Warehouses, requiring enhancements to handle system-to-system data pulls. Phase 3 completed the vision by extending support to high-volume, unstructured sources like Object Storage, transforming the solution from a basic file processor into a universal, high-throughput data integration layer for the business.

PM x Engineering x Data Science - Agile Development

The successful execution of the Dataflow Integration (DFI) project was driven by a tight, iterative Agile collaboration model involving Product Management, Engineering, and Data Science.

- Product Management: As the lead AI product manager, I led the strategic definition and validation of the entire pipeline. My responsibilities included defining the "Human-in-the-Loop" concept, translating core customer needs (like "zero pre-ingestion structuring") into clear requirements, and, critically, defining the requirements for the Data Science models. This involved setting the initial accuracy and precision targets for the AI Field Mapping and Name Standardization Engines, establishing the necessary training data inputs, and validating model output against the final business utility and quality standards.

- Engineering: Built the resilient, scalable three-layer pipeline architecture (Bronze --> Silver --> Gold) and owned the development of all integration modules (SFTP, API connections, etc.), ensuring the pipeline's performance and successful data delivery into the Final Data Store.

- Data Science: Provided the core intelligence by developing and integrating the AI Field Mapping and Name Standardization Engines. DS ensured model accuracy and managed the error/correction logic, providing the automated data quality assurance layer required for the solution's success.
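To make the "Human-in-the-Loop" concept and the accuracy and precision targets concrete, here is a minimal sketch of how mapping predictions might be routed and scored. Everything in it is an assumption for illustration: the `Prediction` shape, the 0.8 review threshold, the sample fields, and the metric definitions (accuracy over all labeled fields, precision over auto-accepted mappings only) are not taken from the project.

```python
from dataclasses import dataclass
from typing import Optional

REVIEW_THRESHOLD = 0.8  # hypothetical cut-off for auto-accepting a mapping


@dataclass
class Prediction:
    source_field: str
    predicted: Optional[str]  # canonical field proposed by the model, if any
    confidence: float


def route(predictions, threshold=REVIEW_THRESHOLD):
    """Split predictions into auto-accepted and human-review queues."""
    auto, review = [], []
    for p in predictions:
        accepted = p.predicted is not None and p.confidence >= threshold
        (auto if accepted else review).append(p)
    return auto, review


def score(predictions, truth, threshold=REVIEW_THRESHOLD):
    """Return (accuracy, precision) against a labeled ground-truth mapping."""
    auto, _ = route(predictions, threshold)
    correct_all = sum(1 for p in predictions if truth[p.source_field] == p.predicted)
    correct_auto = sum(1 for p in auto if truth[p.source_field] == p.predicted)
    accuracy = correct_all / len(predictions) if predictions else 0.0
    precision = correct_auto / len(auto) if auto else 0.0
    return accuracy, precision


predictions = [
    Prediction("Cust Name", "customer_name", 0.95),
    Prediction("E-mail", "email", 0.90),
    Prediction("Amt", "order_total", 0.55),  # correct, but sent to review
    Prediction("Ref", "email", 0.85),        # confidently wrong
]
truth = {"Cust Name": "customer_name", "E-mail": "email",
         "Amt": "order_total", "Ref": "order_id"}

auto, review = route(predictions)
accuracy, precision = score(predictions, truth)
```

Raising the threshold sends more mappings to human review and tends to raise precision on the auto-accepted set, which is the trade-off a target-setting exercise like the one described above has to settle.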

Problem Solving & Edge Cases

I led cross-functional workshops to define technical solutions and resolve ambiguities across Engineering and Data Science. My key contribution was systematically defining all project edge cases and failure modes (e.g., data format failures, API latency) and documenting the required system response for each. This process ensured the pipeline was fault-tolerant and robust, enabling rapid stakeholder alignment on critical solution trade-offs without compromising project velocity.
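As an illustration of pairing each failure mode with a documented system response, the sketch below handles the two examples named above: transient source latency is retried with exponential backoff, and records with format failures are quarantined rather than failing the batch. The exception names, retry counts, and delays are hypothetical and not drawn from the project.

```python
import time


class TransientSourceError(Exception):
    """Hypothetical error for slow or temporarily unavailable sources."""


class FormatError(Exception):
    """Hypothetical error for payloads that cannot be parsed."""


def fetch_with_retry(fetch, retries=3, base_delay=0.5, sleep=time.sleep):
    """Call fetch(); on transient errors, wait base_delay * 2**attempt and retry."""
    for attempt in range(retries):
        try:
            return fetch()
        except TransientSourceError:
            if attempt == retries - 1:
                raise  # retries exhausted: surface the failure to the caller
            sleep(base_delay * 2 ** attempt)


def process_batch(fetch, parse, quarantine, **retry_kwargs):
    """Fetch a batch, parse each payload, and quarantine malformed ones."""
    good = []
    for payload in fetch_with_retry(fetch, **retry_kwargs):
        try:
            good.append(parse(payload))
        except FormatError:
            quarantine.append(payload)  # keep the raw payload for later review
    return good
```

Passing `sleep` as a parameter keeps the backoff testable without real delays; a caller would normally leave it at the default.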

Result

Working collaboratively, we launched a strategic data integration platform that scaled data throughput by 300%, moving from file-based processing to robust enterprise system integration. This resulted in a ~25% reduction in manual data onboarding effort for the professional services team and achieved consistent, verifiable data quality (meeting a 95% normalization accuracy target). By delivering a universal data layer, the product now supports new revenue streams and complex analytical use cases across the entire customer base.

Wireframes & Concepts